Make no mistake, it will eventually. But even behemoths such as Amazon, Microsoft et al. struggle with a simple question: “What will we use it for?”

The Cambrian explosion of news from the AI space can easily give the impression that groundbreaking AI solutions are just around the corner. That is not quite true. Impressive AI solutions exist in the lab, but the leap to practical applications is bigger than we think. The reason has to do with the 70% problem paradox.

You have probably heard of the 80/20 rule. The 70% paradox follows similar logic; it just hits serious constraints a bit sooner.

If we were to ask, “How well are AI solutions faring in practical applications?”, the place to look would be the emerging areas where AI is already being used. One particularly good field of study is AI in software development.

Learnings from AI in software development

Software development is a fast-paced, rapidly evolving field where whatever makes you more effective is usually rewarded with an exponential adoption curve. AI-powered software development tools have existed for years. Their general use cases can be grouped into:

- Fast prototyping – from sketch to code in minutes

- Refactoring support

- Bug tracking and resolution

- Code generation from prompts

The hidden cost of AI speed

A reasonable assumption would be that junior developers benefit most from AI-assisted development. But the opposite is true: it’s senior developers who reap the biggest gains. That’s odd!

It turns out that senior and junior developers both use AI, but in different ways. Junior developers use AI to generate code. Getting an MVP up and running in minutes is a breeze.

Senior developers, on the other hand, use AI as a tool to generate knowledge. They also use it to generate code, but they do so iteratively, making sure they can debug it and that they understand the generated code. They carefully add edge cases and polish the testability.

The 70% paradox in software development becomes apparent when you use AI to fix a bug, and the fix produces two new bugs, which you in turn ask AI to fix… and the Sisyphean work has only just started to reveal itself.

The main challenge of software development is not generating code. It’s maintaining and evolving it. Senior developers know this; therefore they never hand over full control to AI. They carefully scrutinize the generated code until it’s both understandable and maintainable by other developers.

Learnings from real-life applications

Two natural use cases for generative AI are:

- Summarizing large bodies of information

- AI chatbots

So how are they doing in real-life applications?

Nabla is using OpenAI’s Whisper to transcribe medical conversations. A group of researchers from Cornell University and the University of Washington studied how accurately Whisper transcribes speech.

According to the researchers, “While many of Whisper’s transcriptions were highly accurate, we find that roughly one percent of audio transcriptions contained entire hallucinated phrases or sentences which did not exist in any form in the underlying audio… 38 percent of hallucinations include explicit harms such as perpetuating violence, making up inaccurate associations, or implying false authority.”

“The researchers found that the AI-added words could include invented medical conditions or phrases you might expect from a YouTube video, such as ‘Thank you for watching!’”

Generative AI struggles with hallucination. The question to ask is: “Do we accept an error rate of 1% in exchange for faster transcription?” For trivial applications, yes. For situations that could lead to being wrongly labelled in the social welfare system, financial instability, injury or loss of life, no. The hidden problem is actually worse than any inflicted injury: the chance that an individual can prove an automated algorithm got it wrong is slim to none.
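To put the rates quoted above in perspective, here is a back-of-the-envelope sketch. The volume of 100,000 transcriptions is a hypothetical figure, and for simplicity the sketch assumes that each affected transcription contains exactly one hallucination, so that “38 percent of hallucinations” can be read as 38 percent of affected transcriptions.

```python
# Back-of-the-envelope estimate using the rates quoted by the researchers.
# ASSUMPTIONS: the transcription volume is hypothetical, and each affected
# transcription is assumed to contain exactly one hallucination.

HALLUCINATION_RATE_PCT = 1  # roughly 1% of transcriptions contained hallucinated phrases
HARMFUL_SHARE_PCT = 38      # 38% of hallucinations included explicit harms


def estimate_impact(total_transcriptions: int) -> tuple[int, int]:
    """Return (transcriptions with hallucinations, transcriptions with harmful ones)."""
    # Integer arithmetic keeps the counts free of floating-point rounding noise.
    hallucinated = total_transcriptions * HALLUCINATION_RATE_PCT // 100
    harmful = hallucinated * HARMFUL_SHARE_PCT // 100
    return hallucinated, harmful


if __name__ == "__main__":
    total = 100_000  # hypothetical number of transcribed conversations
    hallucinated, harmful = estimate_impact(total)
    print(f"{total} transcriptions -> {hallucinated} with hallucinations, "
          f"{harmful} with potentially harmful content")
```

At that hypothetical volume the sketch reports 1,000 transcriptions with hallucinations, 380 of which contain potentially harmful content. A small percentage still means hundreds of real conversations.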

Imagine for a moment that a department within the social welfare system decided to apply AI. The model hallucinates and (wrongly) labels you as a “financial criminal in debt”. Your bank then blocks access to your account and routes your paycheck to a debt clearing house. As you panic, watching your finances melt down before your eyes, you pull yourself together and contact a lawyer to set things straight. “I did nothing wrong,” you explain (which is true).

Now, the worst is yet to come: the legal system put in place to safeguard basic human rights is actually toothless. When all parties swear they did nothing wrong, it dawns on you: the only way to prove your innocence is to prove that the algorithm got it wrong. An impossible catch-22. Even if you had access to all the data, the method, the data centers and the data scientists, the opacity of an AI model means the best result you could hope for is “we can show that our AI model produces this output given this data, but we aren’t sure how it makes the decision”. Plausible, but still far from certain. Let us put it this way: you are f**ked. Think scenarios like this haven’t already happened? Think again.

The scenario above demonstrates the implications of the 70% paradox. It is far from just a technical problem; it is much more serious than that. It is humbling to read OpenAI’s policy on using Whisper:

“Our usage policies prohibit use in certain high-stakes decision-making contexts, and our model card for open-source use includes recommendations against use in high-risk domains.”

The silent shift of power

The answer is obviously not to stop developing AI solutions, nor to assume that the alternative is flawless (humans make errors too). There is, however, a big difference between the two. The legal and social systems in our society have been tuned over a thousand years to deal with human responsibility. There is nothing equivalent in the AI space.

A big part of addressing the 70% problem paradox is realizing that it is not only a tech problem but an intertwined legal and ethical one. Any attempt to address one dimension without considering the others will result in a stepwise loss of public trust. That is not the path forward.

We must talk openly about this simple fact: AI solutions mean a silent shift of power away from the individual and toward the companies developing the algorithms. What do we need in order to trust their process? How can algorithms be held to a level of transparency similar to that of human decision making?

Until now, tech companies have gone out of their way to downplay, ignore or dodge the vacuum that automated decision making creates. That is not the way forward. Ethics and a transparent process are crucial elements. In parallel, legal frameworks need to catch up, and that initiative must come from governments.

So what can we do?

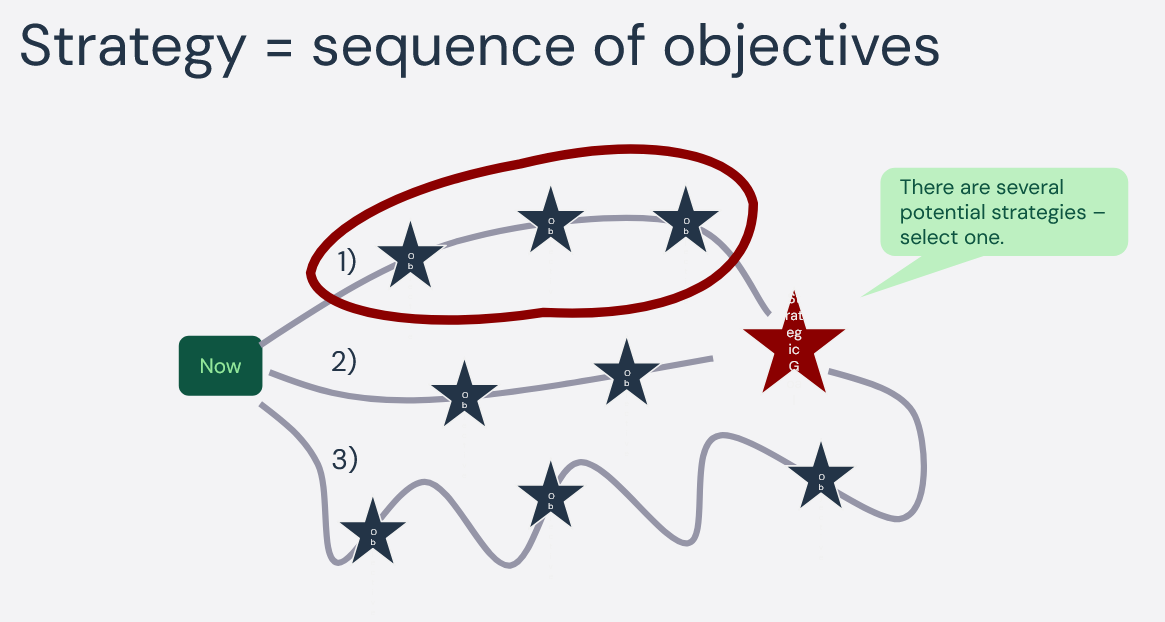

The first piece of advice is never to give up complete control to an AI solution. Blind faith is just that: blind. Any AI solution deployed must be reproducible (produce consistent results given similar input). The process that produced the AI solution must be transparent (the dataset, method, hyperparameters etc.). The responsibility for this falls heavily on the companies developing the solutions.
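What could reproducibility and transparency look like in practice? Below is a minimal sketch, assuming a Python training pipeline: record a fingerprint of the dataset, the method and the hyperparameters in a manifest, fix the random seed, and check that two runs with the same manifest and data give identical output. The names `RunManifest`, `dataset_digest` and `train_toy_model` are hypothetical, and the “model” is a toy stand-in rather than any real framework’s API.

```python
import hashlib
import json
import random
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class RunManifest:
    """Hypothetical record of everything needed to reproduce a training run."""
    dataset_sha256: str
    method: str
    hyperparameters: dict
    seed: int

    def fingerprint(self) -> str:
        # Canonical JSON (keys sorted, including nested ones) so that equal
        # manifests always produce the same hash.
        canonical = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def dataset_digest(rows: list[str]) -> str:
    """Hash the training data so the exact dataset version is on record."""
    h = hashlib.sha256()
    for row in rows:
        h.update(row.encode("utf-8"))
        h.update(b"\n")  # separator so ["ab"] and ["a", "b"] hash differently
    return h.hexdigest()


def train_toy_model(rows: list[str], manifest: RunManifest) -> list[float]:
    """Toy stand-in for training: one weight per row, with seeded noise."""
    # A dedicated generator seeded from the manifest makes the run deterministic.
    rng = random.Random(manifest.seed)
    lr = manifest.hyperparameters["learning_rate"]
    return [round(len(row) * lr + rng.gauss(0.0, 0.01), 6) for row in rows]


if __name__ == "__main__":
    data = ["case A", "case B", "case C"]  # hypothetical training rows
    manifest = RunManifest(
        dataset_sha256=dataset_digest(data),
        method="toy-linear",
        hyperparameters={"learning_rate": 0.1},
        seed=42,
    )
    first = train_toy_model(data, manifest)
    second = train_toy_model(data, manifest)
    print("manifest fingerprint:", manifest.fingerprint())
    print("reproducible:", first == second)
```

Using a dedicated `random.Random` instance, rather than the global random state, keeps the run independent of whatever else in the program consumes random numbers. The published fingerprint then gives an outside party something concrete to check the claimed process against.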

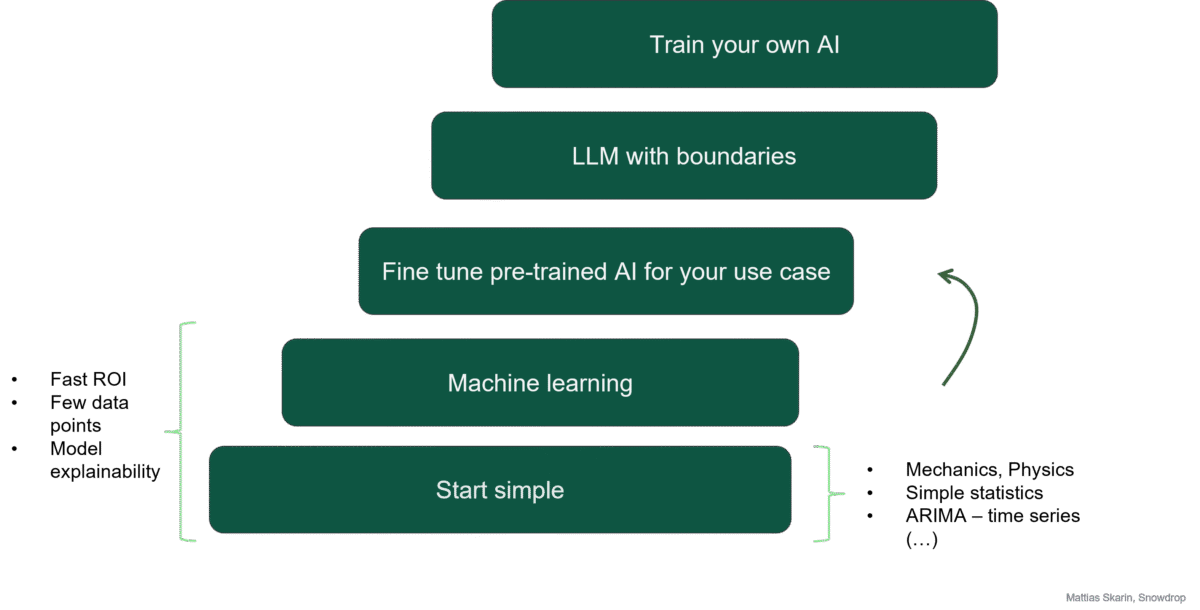

The second piece of advice is to understand how AI development is done. Armed with this knowledge, we can ask relevant questions when setting up the process; we can bring transparency to it and shape it. Without this knowledge, we are lost and won’t even know what questions to ask.

What do we do @ Snowdrop, you may ask? This is exactly why we run, for example, the “AI for developers” course.

Prompt engineering won’t help you overcome the 70% problem paradox.

The 70% problem paradox in a nutshell

Covering 70 percent of the use cases is relatively easy. Covering the remaining 30 percent is where the real effort is needed. Ask automated delivery bots about the last-mile problem.
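One way to see why the last 30 percent is so expensive is to model use cases as a long tail. The sketch below assumes, purely for illustration, 10,000 distinct kinds of situations whose frequencies follow a Zipf distribution (the k-th most common case occurs in proportion to 1/k). That distribution is an assumption, not something measured in the sources above. The sketch simply counts how many distinct cases a system must handle to reach a given share of all occurrences.

```python
def cases_needed(coverage: float, n_cases: int = 10_000, exponent: float = 1.0) -> int:
    """Smallest number of most-frequent case types covering `coverage` of occurrences.

    ASSUMPTION (hypothetical): case frequencies are Zipf-distributed,
    i.e. weight(k) = 1 / k**exponent for k = 1..n_cases.
    """
    weights = [1.0 / k ** exponent for k in range(1, n_cases + 1)]
    total = sum(weights)
    covered = 0.0
    # Handle cases from most to least frequent until the target share is reached.
    for k, w in enumerate(weights, start=1):
        covered += w
        if covered / total >= coverage:
            return k
    # Floating-point rounding can leave the running share a hair below 1.0.
    return n_cases


if __name__ == "__main__":
    for target in (0.70, 0.90, 0.99):
        print(f"{target:.0%} coverage needs the {cases_needed(target)} most common cases")
```

Under these assumptions, reaching 99 percent coverage requires more than ten times as many distinct cases as reaching 70 percent: the rare situations are individually unlikely, but there are a great many of them.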

Ask self-driving cars about driving in poor visibility, telling a kangaroo from an elk, or distinguishing a cone on the hood from one marking a roadside construction site. The answer is the same: it’s damn hard.

The main reason we haven’t stumbled upon the 70% paradox yet is simple: AI solutions haven’t yet made it into real-life applications in large numbers. And where they are out there, they are still under human supervision.

What we should do is learn quickly from the fields where AI is already in practical use.

References

“AI & the power over decision making” (in Swedish), Fjaestad Maja, Vinge Simon, Volante Förlag, 2024

“Arrested by AI: Police ignore standards after facial recognition matches”, Washington Post, 2025-01-13

“His girlfriend died. Now Dillon is fighting against Tesla” (in Swedish), SvD, 2024-05-11

“Hospitals use a transcription tool powered by an error-prone OpenAI model”, The Verge, 2024

“The AI Trap – The Cycle of Shiny New Technologies”, Substack, 2024-12-18

“The 70% problem: Hard truths about AI-assisted coding”, Osmani Addy, 2024